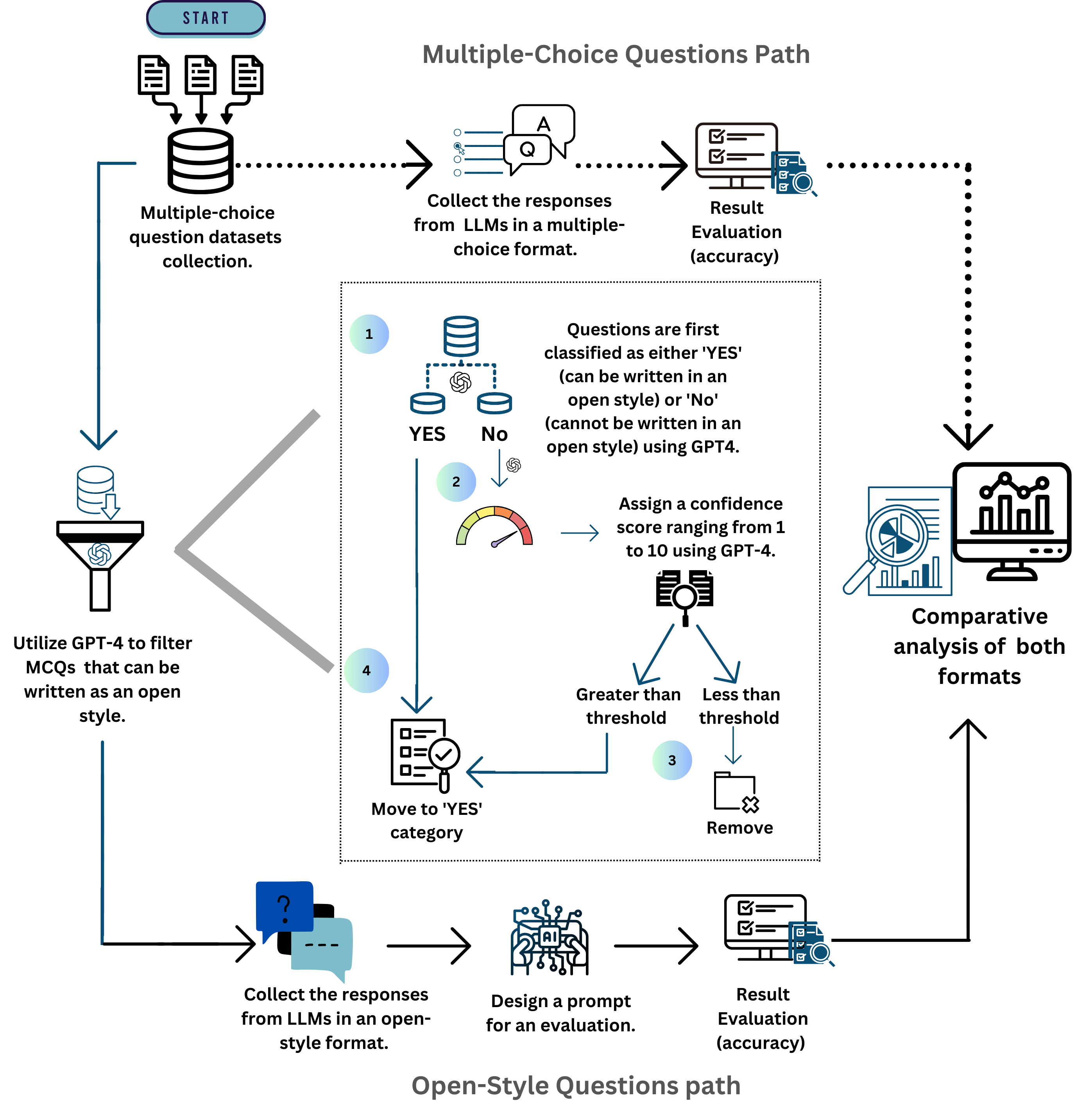

Large language models (LLMs) excel at a wide range of natural language processing tasks, but assessing their performance accurately requires robust evaluation strategies. Traditionally, multiple-choice questions (MCQs) have been used for this purpose. However, they are prone to selection bias and random guessing. This paper moves from MCQs to open-style questions to provide a more accurate assessment of LLM capabilities. We introduce both the Open-LLM-Leaderboard and a new benchmark to evaluate and compare the performance of different LLMs.

Multiple-choice questions (MCQs) are frequently used to assess large language models (LLMs). Unfortunately, MCQs can introduce bias: a model may favor certain answer options because unbalanced prior probabilities over the options influence its predictions. Our benchmark consists entirely of open-style questions, moving away from MCQs to better reflect true LLM capabilities.

To eliminate selection bias and random guessing at their source, we build an open-style question benchmark for LLM evaluation. Building on this benchmark, we present the Open-LLM-Leaderboard, a new automated framework designed to refine the assessment of LLMs.

Open-style questions require models to generate answers without being constrained by predetermined choices, which tests the model’s ability to produce coherent and contextually appropriate responses. This approach avoids the selection bias and random guessing inherent in MCQs.
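The difference between the two formats can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the benchmark's actual pipeline: the helper names (`mcq_prompt`, `open_style_prompt`, `random_guess_accuracy`, `is_correct`), the prompt wording, and the phrase-matching check are all hypothetical, and the matching check is a crude stand-in for whatever answer evaluation the leaderboard really uses.

```python
import re
import string


def mcq_prompt(question, options):
    """Format a question with lettered options (the MCQ setting)."""
    labels = string.ascii_uppercase
    lines = [question]
    lines += [f"{labels[i]}. {opt}" for i, opt in enumerate(options)]
    lines.append("Answer with the letter of the correct option.")
    return "\n".join(lines)


def open_style_prompt(question):
    """Format the same question with no options (the open-style setting)."""
    return f"{question}\nAnswer concisely in your own words."


def random_guess_accuracy(num_options):
    """Expected accuracy of uniform random guessing over the options."""
    return 1.0 / num_options


def normalize(text):
    """Lowercase, replace punctuation with spaces, collapse whitespace."""
    text = re.sub(r"[^a-z0-9\s]", " ", text.lower())
    return " ".join(text.split())


def is_correct(response, reference):
    """Hypothetical check: the reference appears as a whole phrase
    in the response. Assumed for illustration only."""
    return f" {normalize(reference)} " in f" {normalize(response)} "


question = "What is the capital of France?"
options = ["Berlin", "Paris", "Madrid", "Rome"]

print(mcq_prompt(question, options))
print(open_style_prompt(question))
print(random_guess_accuracy(len(options)))
print(is_correct("The capital is Paris.", "Paris"))
```

With four options, a model that guesses uniformly at random still scores 25% in expectation on the MCQ form. The open-style prompt exposes no option labels, so there is nothing to guess among and no label to favor.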

@article{myrzakhan2024openllmleaderboard,
  title={Open-LLM-Leaderboard: From Multi-choice to Open-style Questions for LLMs Evaluation, Benchmark, and Arena},
  author={Aidar Myrzakhan and Sondos Mahmoud Bsharat and Zhiqiang Shen},
  journal={arXiv preprint arXiv:2406.07545},
  year={2024},
}